“A woman without paint is like food without salt.” - Plautus

Early Uses

The first known uses of makeup date to Egypt’s first dynasty, around 3000 B.C. Archaeologists and historians have discovered jars of makeup in ancient Egyptian tombs. Kohl appears to have been especially prevalent. Kohl, essentially a product of soot, was used to darken the area around the eyes. Both men and women played a part in the early history and evolution of makeup: Egyptian men also used kohl to accentuate the eyes.

Like the Egyptians, the ancient Romans developed and used cosmetic products and remedies. Plautus, a Roman playwright living around 200 B.C., famously professed, “A woman without paint is like food without salt.” The statement reflects Roman ideals of beauty and, to some extent, anticipates the role makeup would later play around the world. Romans, too, used kohl to darken the eyes, making them the dominant feature of the face. In addition to kohl, they used chalk to lighten the skin and rouge to redden the cheeks.

Later, during the European Middle Ages, makeup became a sign of affluence. As in Rome, a pale complexion represented beauty. In this period, however, pale skin also indicated wealth: the rich did not perform manual labor outdoors, whereas the lower classes could not avoid the sun and weather in the course of their work. In addition to using “lightening” makeup, many affluent women achieved this look by literally bleeding their faces. Similarly, Victorian notions of beauty emphasized the genteel appearance of a fair complexion. However, Victorian women approached skin care differently from women of earlier eras. Because heavy makeup was largely discouraged, women instead drank vinegar and avoided going outdoors to keep their complexions pale. http://www.fashion-era.com/make_up.htm#Victorian%20Delicacy

During the Regency Era in the United Kingdom, the period that bridges the Georgian and Victorian eras, beauty became a central feature of femininity. During this period, beginning in the early 1800s, the practice of lightening the skin became widespread, because light skin represented a life of leisure. As in the Middle Ages, this era favored pale complexions, but new technologies became available to achieve them. Women continued to use cosmetic products even though many of the chemicals in them were lethal; many of these cosmetics contained white lead and mercury, which could prove fatal. During the 1800s, many women also used “belladonna,” a plant native to Europe and western Asia, to make their eyes appear more “luminous.” The plant is poisonous, yet preparations made from it continued to sell despite the risk of death. Although many of these products were made by pharmacists in English apothecaries, they contained mercury and nitric acid, both of which are poisonous. Men, too, adorned their faces with a variety of products, and until the mid-1800s makeup was widely used by men. King George IV, for example, wore makeup regularly, as it was a socially acceptable part of dress. http://www.authorsden.com/visit/viewArticle.asp?id=15438

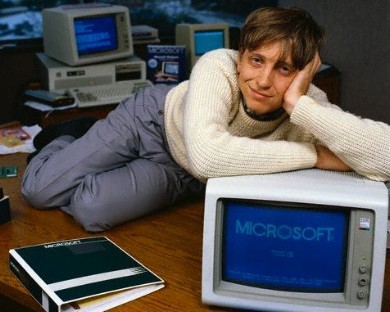

Twentieth Century

The beginning of the twentieth century marks a critical turning point for the cosmetic industry. Cosmetics companies emerged, bringing mass production and, with it, mass consumption of cosmetics. Maybelline and Max Factor, for example, introduced their products to the consumer market. The 1920s in particular mark the main ideological shift in attitudes toward makeup: through makeup, women displayed a sense of independence and freedom of expression. Sun tanning also became notable in the 1920s, as women began to favor the tanned complexion celebrated by Coco Chanel. This trend led to the development of lotions and creams designed to protect the skin from the sun and the elements.

In “Cosmetics,” an article published in 1938, H. H. Hazen, an American physician, provides an overview of cosmetic use from both a medical and a social standpoint. According to Hazen, rouge consists of talcum, zinc oxide, starch, chalk, dyes, wax and fat, and it can also contain undesirable elements such as barium and lead. Lipsticks, made of waxes, fat and dyes, act to dye the lips. Eyelash preparations, originally made from copper or lead ore, could still have fatal consequences as late as 1938, when Hazen published his article. He notes that mascara consists of lampblack, petrolatum and a component of soap. Even so, accounts of blindness and severe poisoning continued to recur. http://www.jstor.org/pss/3413149

Viewed theoretically, the establishment of makeup as a social norm raises two questions. First, if makeup is in fact a technology, what is it for? That is to say, what is society progressing toward? Second, whom is the technology intended to advance? Specifically, it matters who benefits from the technology and who falls victim to its products. The introduction of a technology can create inequalities of power that shape fundamental patterns of social existence. Advances in cosmetics and makeup reflect changing cultural ideologies, including increasing globalization and ethnocentric outlooks. Ultimately, technologies such as cosmetic makeup act to overcome the human body.

In today’s society of mass consumption, the cosmetic industry has evolved through cultural influences that precede the appearance of new technologies. For example, cosmetic companies today use clinical tests, emphasizing consumer protection under the guise of “safety.” Although these products are no longer considered a luxury, the ideal of female beauty rests firmly on the cosmetic makeup industry.